About Me

I am a Ph.D. student in robotics at the Robotic Systems Lab (RSL), ETH Zurich, supervised by Prof. Marco Hutter. My research focuses on foundation models for perception and navigation of legged robots in the wild.

Previously, I obtained my MSc in Robotics, Systems and Control from ETH Zurich in 2024, after which I joined RSL as a Research Engineer. I did my master's thesis at NASA Jet Propulsion Laboratory (JPL), CA, USA, working on traversability mapping for off-road environments.

Prior to this, I worked on projects involving neural implicit SLAM, high-speed motion estimation, collaborative visual-inertial SLAM, and LiDAR-guided object detection. I have worked with a diverse range of sensors, from dynamic vision sensors (event cameras) and RGB/RGB-D/stereo cameras to LiDARs and IMUs. I have experience with different robotic platforms, including legged robots (ANYmal C, D), wheeled ground robots (Polaris RZR dune buggy, SuperMegaBot, Clearpath Husky UGV), and flying robots (quadcopters, hexacopters).

Education

2025 - present

ETH Zurich, Switzerland

Ph.D. in Robotics (ongoing)

2021 - 2024

ETH Zurich, Switzerland

Master of Science (MSc.) in Robotics, Systems, and Control

2017 - 2021

Indian Institute of Technology Kharagpur, India

Bachelor of Technology (B.Tech.) in Mechanical Engineering with Micro-specialisation in Entrepreneurship and Innovation

Internships

Sep 2023 - Apr 2024

Perception Systems (347J), NASA Jet Propulsion Laboratory, Pasadena, USA

Master's Thesis

Project: Bird's Eye View Learning for Traversability Mapping of Offroad Environments

Sep 2022 - Feb 2023

Sony RDC, Zurich, Switzerland

Computer Vision Intern

Project: High Speed Motion Estimation using Event-based Vision

May 2020 - Apr 2021

Robot Perception Group, Max Planck Institute for Intelligent Systems, Germany

Bachelor's Thesis (Remote)

Project: Mapping of Archaeological Sites using UAVs

Teaching

Mar 2023 - Jul 2023

Teaching Assistant, Robotics Summer School, ETH Zurich

Teaching assistant for the annual Robotics Summer School organized by RobotX

Research

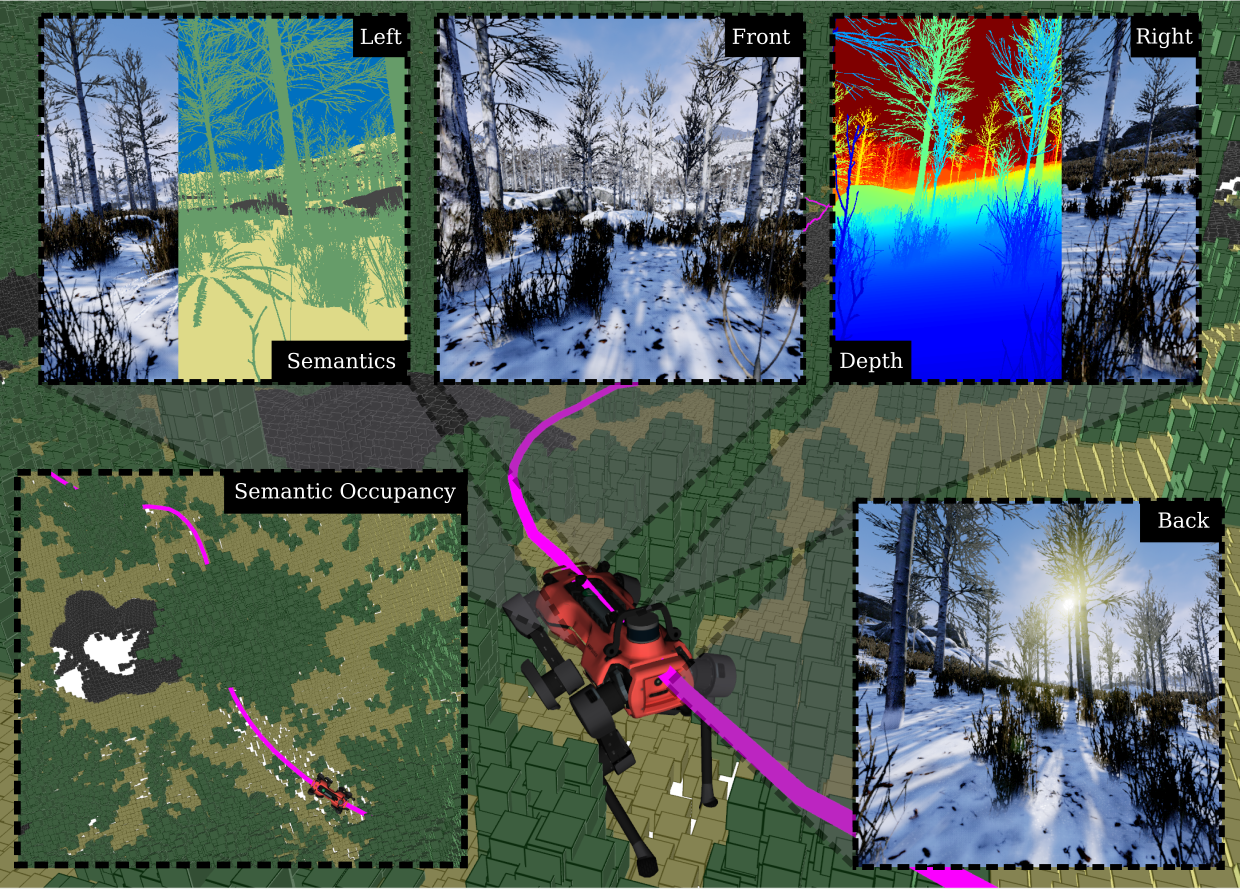

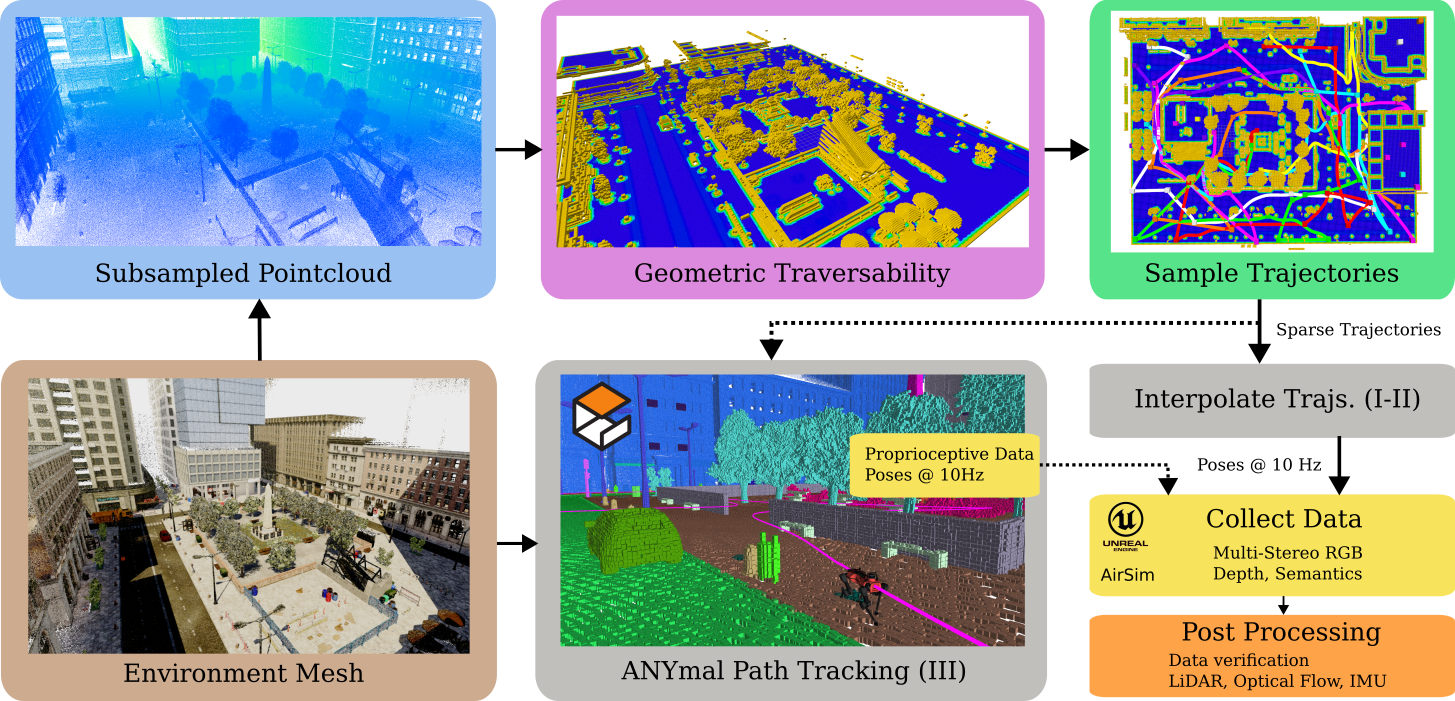

TartanGround: A Large-Scale Dataset for Ground Robot Perception and Navigation

Manthan Patel, Fan Yang, Yuheng Qiu, Cesar Cadena, Sebastian Scherer, Marco Hutter, Wenshan Wang

IEEE International Conference on Intelligent Robots and Systems (IROS) 2025

We present TartanGround, a large-scale, multi-modal dataset to advance the perception and autonomy of ground robots operating in diverse environments. The dataset, collected in various photorealistic simulation environments, includes multiple RGB stereo cameras for 360-degree coverage, along with depth, optical flow, stereo disparity, LiDAR point clouds, ground-truth poses, semantic segmentation images, and occupancy maps with semantic labels. Data is collected using an integrated automatic pipeline that generates trajectories mimicking the motion patterns of various ground robot platforms, including wheeled and legged robots. We collect 910 trajectories across 70 environments, resulting in 1.5 million samples. Evaluations on occupancy prediction and SLAM tasks reveal that state-of-the-art methods trained on existing datasets struggle to generalize across diverse scenes. TartanGround can serve as a testbed for training and evaluating a broad range of learning-based tasks, including occupancy prediction, SLAM, neural scene representation, and perception-based navigation, enabling progress in robotic perception and autonomy towards robust models that generalize to more diverse scenarios.

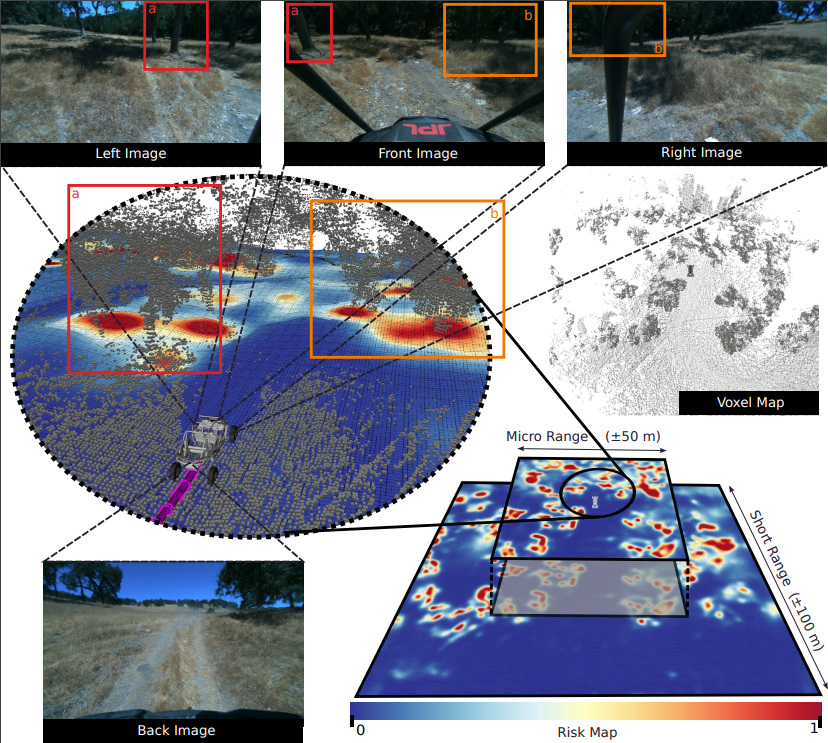

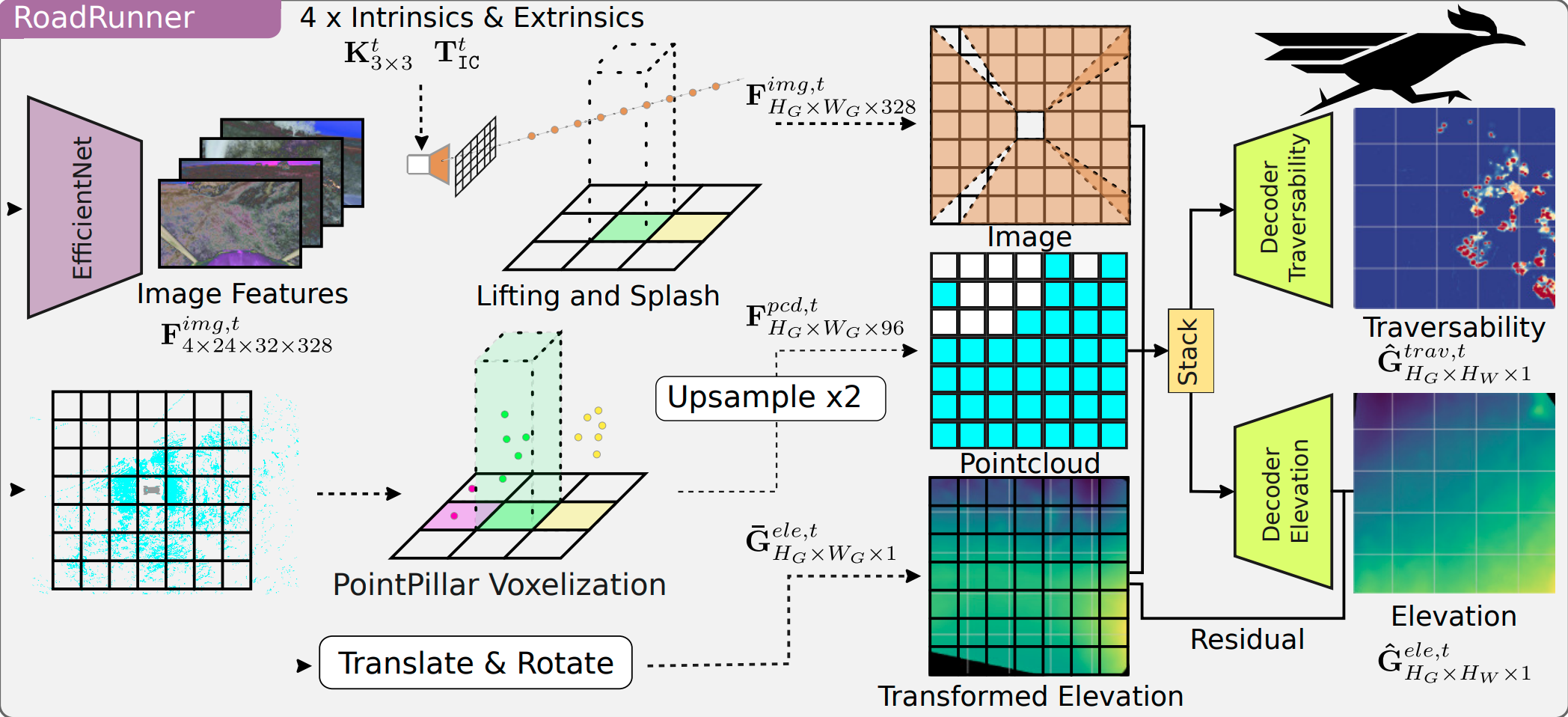

RoadRunner M&M -- Learning Multi-range Multi-resolution Traversability Maps for Autonomous Off-road Navigation

Manthan Patel, Jonas Frey, Deegan Atha, Patrick Spieler, Marco Hutter, Shehryar Khattak

IEEE RA-L 2024

Autonomous robot navigation in off-road environments requires a comprehensive understanding of the terrain geometry and traversability. Degraded perceptual conditions and sparse geometric information at longer ranges make the problem challenging, especially when driving at high speeds. Furthermore, the sensing-to-mapping latency and the look-ahead map range can limit the maximum speed of the vehicle. Building on our recent work, RoadRunner, we address the challenge of long-range (100 m) traversability estimation. RoadRunner M&M is an end-to-end learning-based framework that directly predicts traversability and elevation maps at multiple ranges (50 m, 100 m) and resolutions (0.2 m, 0.8 m), taking as input multiple images and a LiDAR voxel map. Our method is trained in a self-supervised manner by leveraging a dense supervision signal, generated by fusing in-hindsight predictions from an existing traversability estimation stack (X-Racer) with satellite Digital Elevation Maps. RoadRunner M&M achieves improvements of up to 50% for elevation mapping and 30% for traversability estimation over RoadRunner, and predicts in 30% more regions than X-Racer while running in real time. Experiments on various out-of-distribution datasets also demonstrate that our data-driven approach starts to generalize to novel unstructured environments. We integrate the proposed framework in closed loop with a path planner to demonstrate autonomous high-speed off-road navigation in challenging real-world environments.

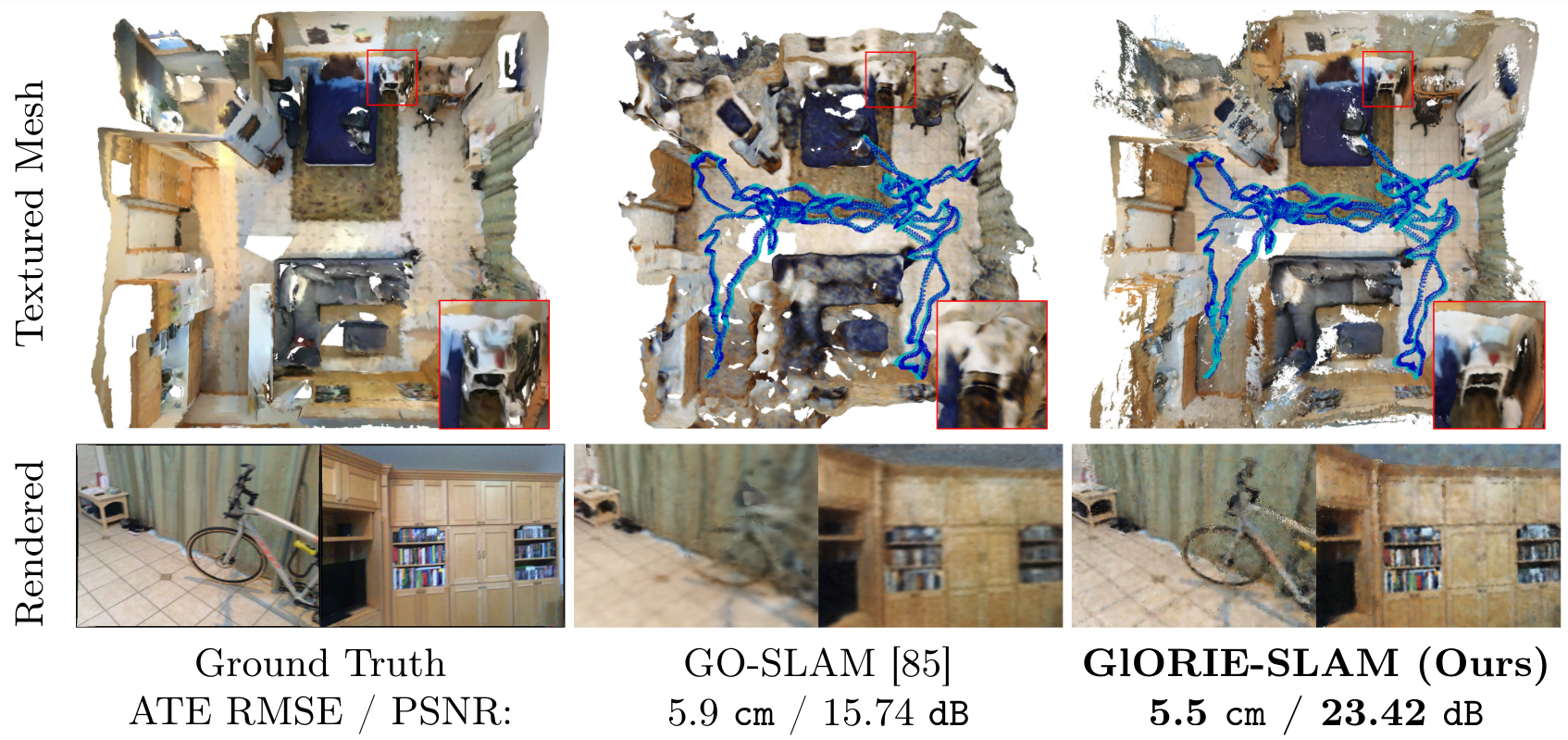

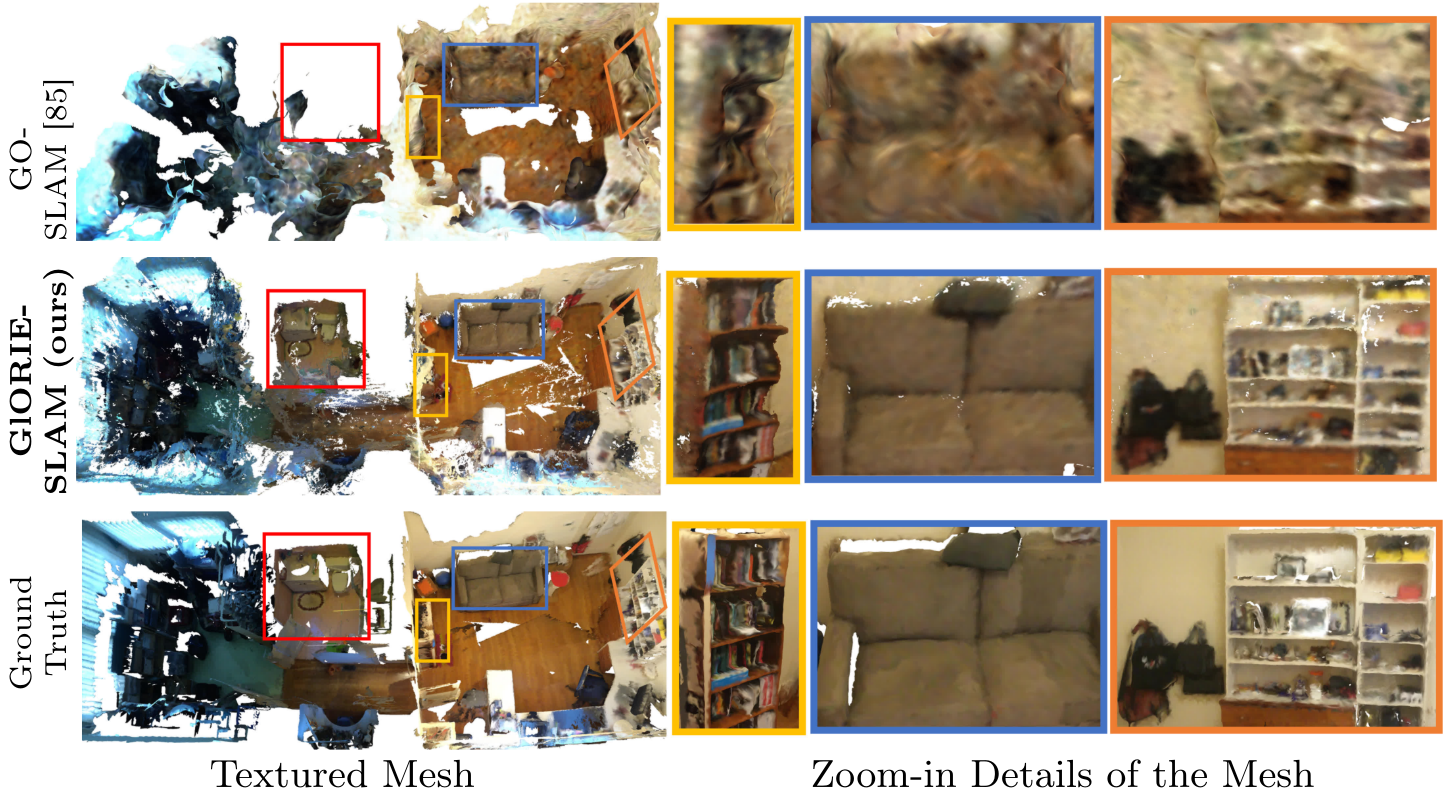

GlORIE-SLAM: Globally Optimized RGB-only Implicit Encoding Point Cloud SLAM

Ganlin Zhang*, Erik Sandström*, Youmin Zhang, Manthan Patel, Luc Van Gool, Martin R. Oswald

Under review for IEEE RA-L

Recent RGB-only dense Simultaneous Localization and Mapping (SLAM) methods predominantly rely on grid-based neural implicit encodings and/or struggle to efficiently achieve global map and pose consistency. To this end, we propose an efficient RGB-only dense SLAM system using a flexible neural point cloud scene representation that adapts to keyframe pose and depth updates without needing costly backpropagation. Another critical challenge of RGB-only SLAM is the lack of geometric priors. To alleviate this issue, we use a monocular depth estimator and introduce a novel DSPO layer for bundle adjustment, which optimizes the pose and depth of keyframes along with the scale of the monocular depth. Finally, our system benefits from loop closure and online global bundle adjustment, and performs better than or competitively with existing dense neural RGB SLAM methods in tracking, mapping, and rendering accuracy on the Replica, TUM-RGBD, and ScanNet datasets.

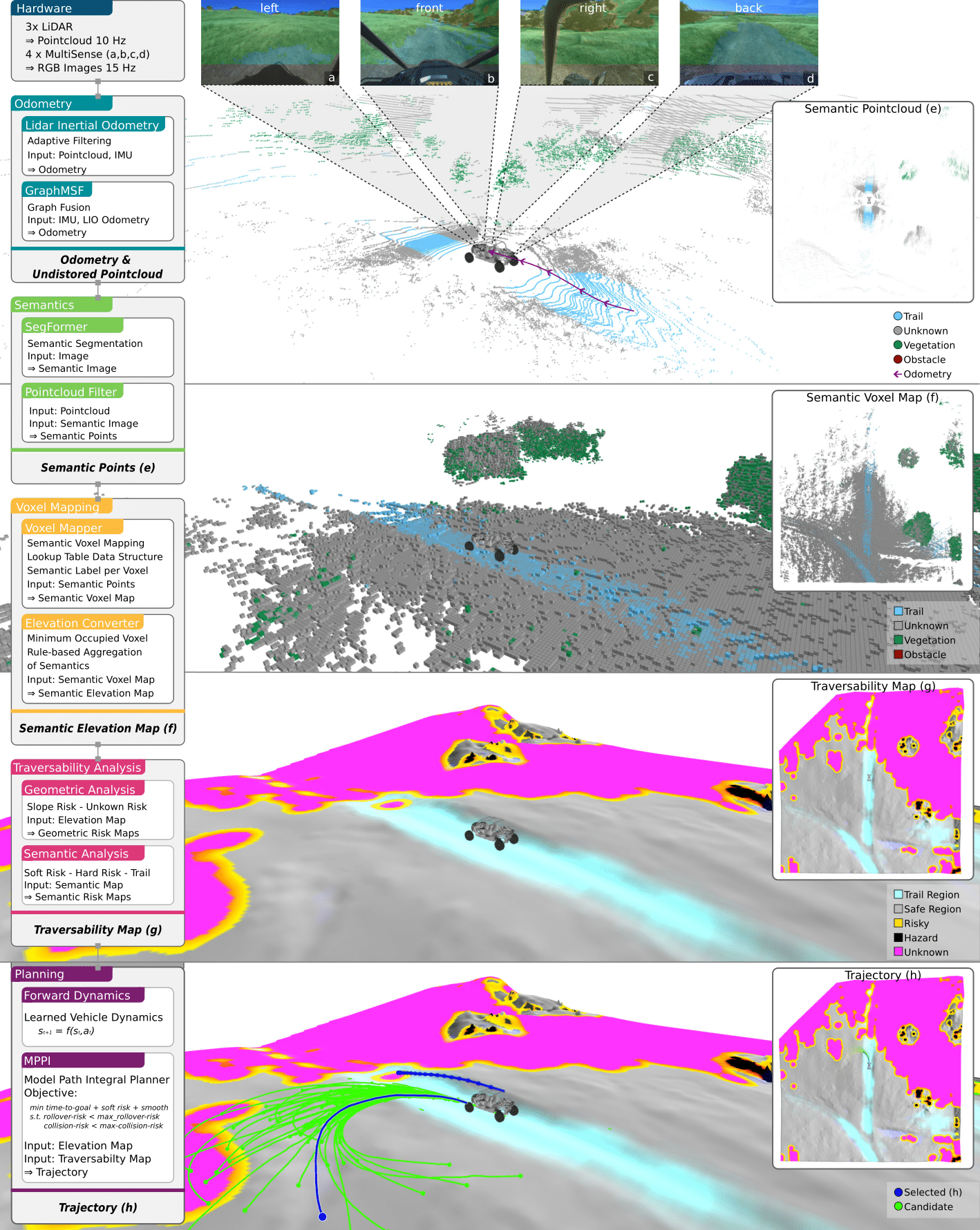

RoadRunner - Learning Traversability Estimation for Autonomous Off-road Driving

Jonas Frey, Manthan Patel, D Atha, J Nubert, D Fan, A Agha, C Padgett, M Hutter, P Spieler, S Khattak

IEEE Transactions on Field Robotics (T-FR)

Autonomous navigation at high speeds in off-road environments requires robots to comprehensively understand their surroundings using onboard sensing only. The extreme conditions of the off-road setting can degrade camera image quality through poor lighting and motion blur, and leave only sparse geometric information from LiDAR when driving at high speeds. In this work, we present RoadRunner, a novel framework capable of predicting terrain traversability and an elevation map directly from camera and LiDAR sensor inputs. RoadRunner enables reliable autonomous navigation by fusing sensory information, handling uncertainty, and generating contextually informed predictions about the geometry and traversability of the terrain while operating at low latency. In contrast to existing methods that classify handcrafted semantic classes and use heuristics to predict traversability costs, our method is trained end-to-end in a self-supervised fashion. The RoadRunner network architecture builds upon popular sensor fusion architectures from the autonomous driving domain, which embed LiDAR and camera information into a common Bird's Eye View perspective. Training is enabled by using an existing traversability estimation stack to generate training data in hindsight, in a scalable manner, from real-world off-road driving datasets. Furthermore, RoadRunner reduces the system latency by a factor of roughly 3.5, from 500 ms to 140 ms, while improving the accuracy of traversability cost and elevation map predictions. We demonstrate the effectiveness of RoadRunner in enabling safe and reliable off-road navigation at high speeds in multiple real-world driving scenarios through unstructured desert environments.

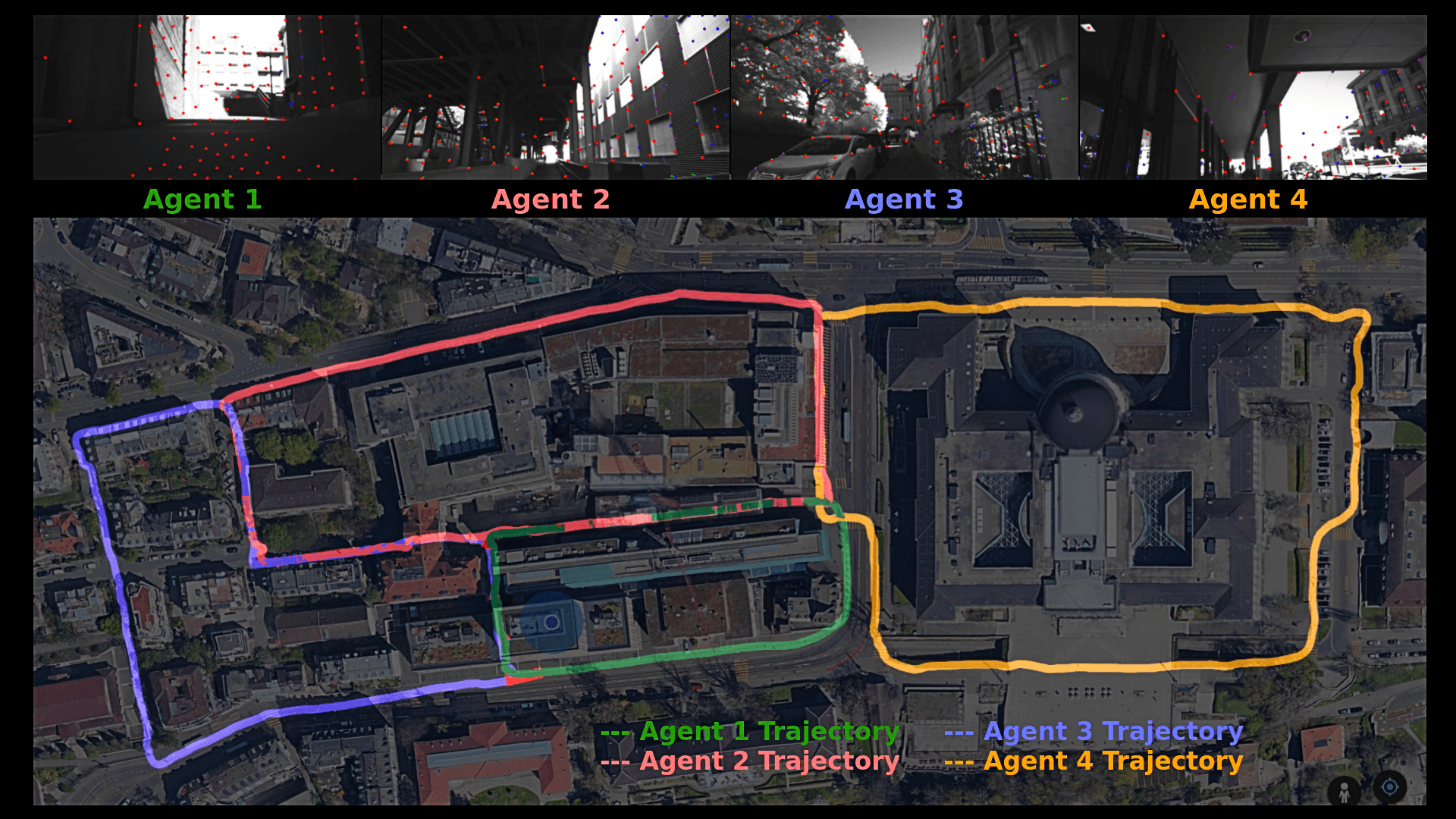

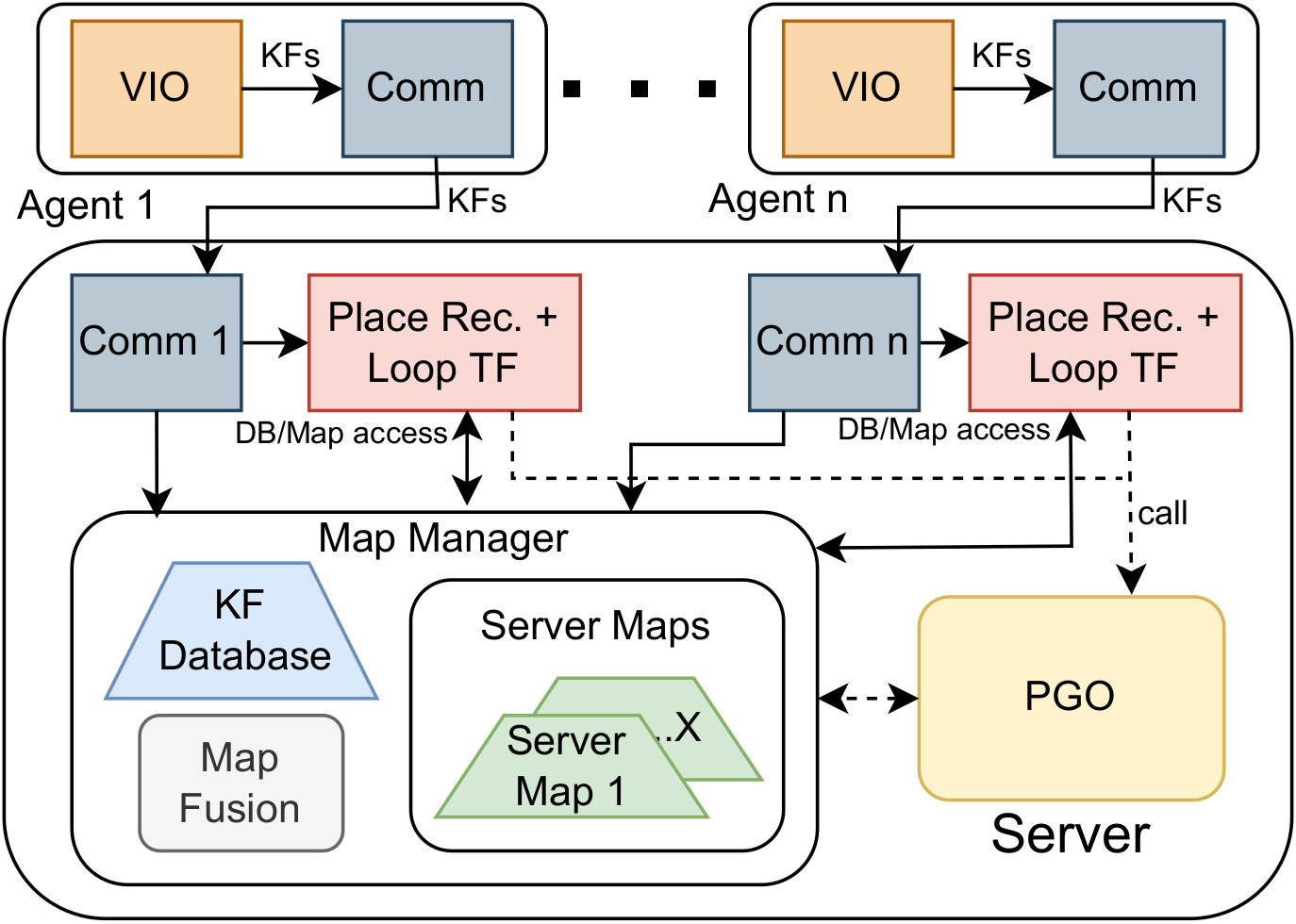

COVINS-G: A Generic Back-end for Collaborative Visual-Inertial SLAM

Manthan Patel, Marco Karrer, Philipp Bänninger, Margarita Chli

International Conference on Robotics and Automation (ICRA), 2023

Collaborative SLAM is essential for multi-robot systems, enabling co-localization in a common reference frame, which is crucial for coordination. Traditionally, a centralized architecture uses agents with onboard Visual-Inertial Odometry (VIO) that communicate data to a central server for map fusion and optimization. However, the flexibility of this approach is constrained by the choice of VIO front-end. Our work introduces COVINS-G, a generalized back-end building on COVINS, which makes the server compatible with any VIO front-end, including off-the-shelf cameras such as the Intel RealSense T265. The COVINS-G back-end deploys a multi-camera relative pose estimation algorithm to compute loop-closure constraints, allowing the system to work purely on 2D image data. In the experimental evaluation, we show on-par accuracy with state-of-the-art multi-session and collaborative SLAM systems, while demonstrating the flexibility and generality of our approach by employing different front-ends onboard collaborating agents within the same mission. The COVINS-G codebase, including a generalized ROS-based front-end wrapper, is open-sourced.


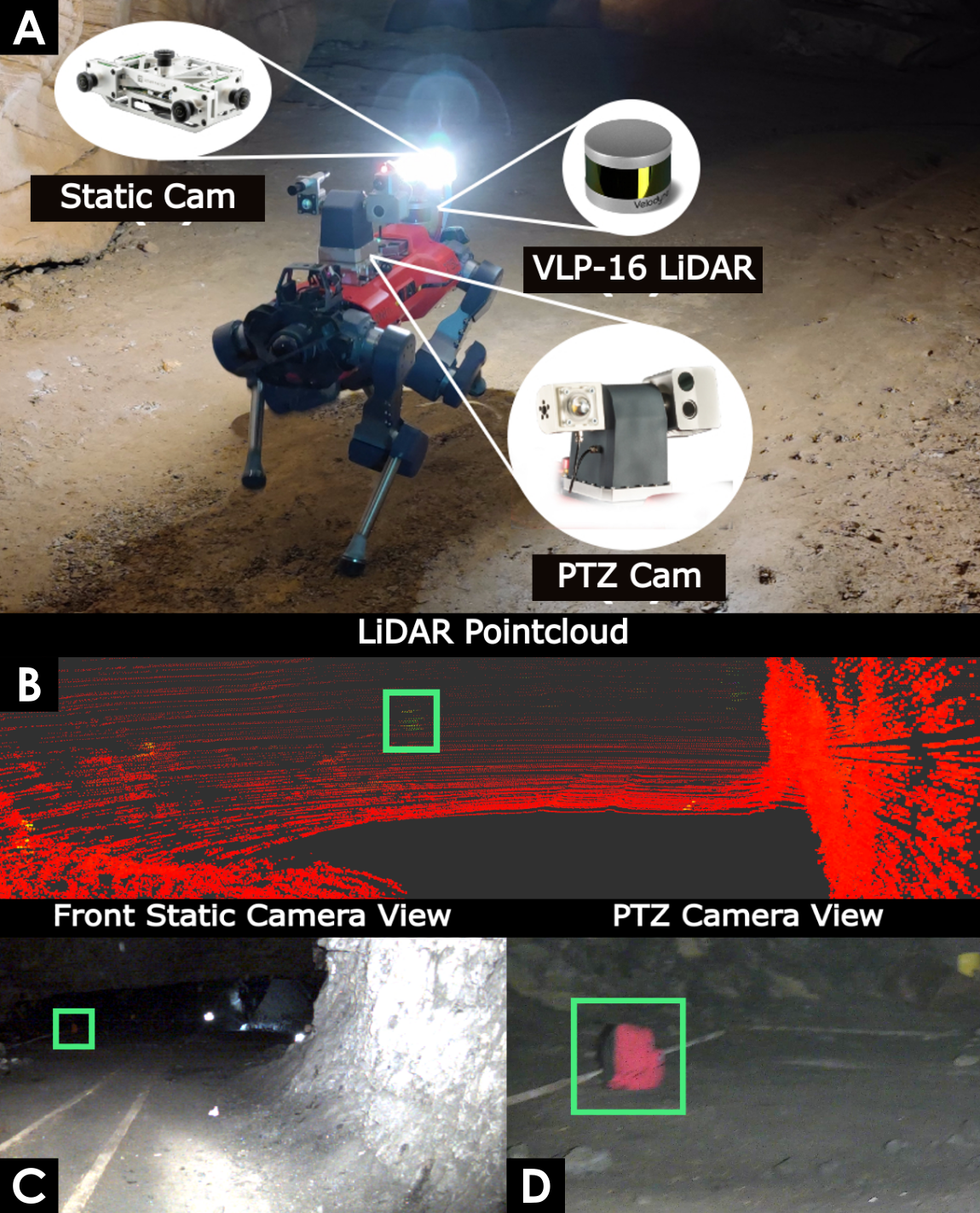

LiDAR-guided object search and detection in Subterranean Environments

Manthan Patel, Gabriel Waibel, Shehryar Khattak, Marco Hutter

IEEE International Symposium on Safety, Security and Rescue Robotics (SSRR) 2022

Detecting objects of interest, such as human survivors, safety equipment, and structure access points, is critical to any search-and-rescue operation. Robots deployed for such time-sensitive efforts rely on their onboard sensors to perform their designated tasks. However, because disaster response operations are predominantly conducted under perceptually degraded conditions, commonly used sensors such as visual cameras and LiDARs suffer from performance degradation. In response, this work presents a method that exploits the complementary nature of vision and depth sensors, leveraging multi-modal information to aid object detection at longer distances. In particular, depth and intensity values from sparse LiDAR returns are used to generate proposals for objects present in the environment. These proposals then guide a Pan-Tilt-Zoom (PTZ) camera system, which performs a directed search by adjusting its pose and zoom level to detect and classify objects in difficult environments. The proposed work has been thoroughly verified using an ANYmal quadruped robot in underground settings and on datasets collected during the DARPA Subterranean Challenge finals.

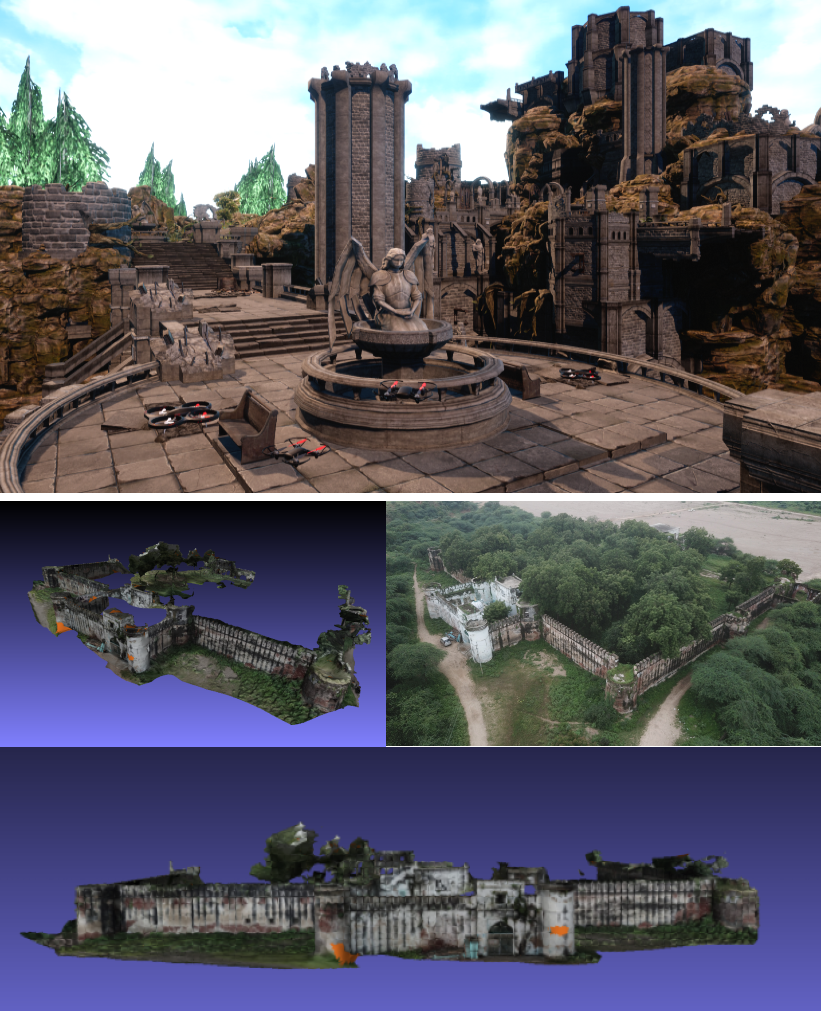

Collaborative Mapping of Archaeological Sites using multiple UAVs

Manthan Patel, Aditya Bandopadhyay, Aamir Ahmad

International Conference on Intelligent Autonomous Systems (IAS 16), 2021

UAVs have found an important application in archaeological mapping. The majority of existing methods process the data collected from an archaeological site offline, which is time-consuming and computationally expensive. In this paper, we present a multi-UAV approach for faster mapping of archaeological sites. Employing a team of UAVs not only reduces the mapping time by distributing the coverage area, but also improves map accuracy through the exchange of information. Through extensive experiments in a realistic simulation (AirSim), we demonstrate the advantages of a collaborative mapping approach. Using our proposed method, we then create the first 3D map of the Sadra Fort, a 15th-century fort located in Gujarat, India. Additionally, we present two novel archaeological datasets, recorded both in simulation and in the real world, to facilitate research on collaborative archaeological mapping. For the benefit of the community, we make the AirSim simulation environment as well as the datasets publicly available.

Awards and Achievements

Sep 2023 - Apr 2024

JPL Visiting Student Research Program

JPL Visiting Student Research Fellow for conducting my master's thesis at NASA JPL, Pasadena, CA

Sep 2021 - Mar 2023

ETH D-MAVT Scholarship

Full, merit-based scholarship awarded to fewer than 2.5% of all D-MAVT master's students at ETH Zurich

Dec 2021

Dr. B C Roy Memorial Gold Medal

Adjudged the best all-rounder (academics and extracurriculars) among all 1400 graduating B.Tech. students at IIT Kharagpur

Dec 2021

Institute Silver Medal (Academic Rank 1)

Highest Cumulative Grade in Mechanical Engineering among all graduating B.Tech students at IIT Kharagpur

Apr 2020 - Dec 2020

DAAD WISE Scholarship

Recipient of the prestigious scholarship to carry out a research internship at a German research institute (MPI-IS)

2018 - 2020

Inter-IIT Tech Meet 7.0, 8.0 and 9.0

Represented IIT Kharagpur three consecutive times at the Inter-IIT Tech Meet, in problem statements involving an autonomous agricultural robot, a multi-UAV search mission, and a UAV-based subterranean exploration mission.

Results: Second Runner Up (2018), First Runner Up (2019) and Winner (2020)

Oct 2020

OP Jindal Engineering and Management Scholarship

Recipient of the scholarship awarded to 100 students across India for academic and leadership excellence

Jun 2019

27th Intelligent Ground Vehicle Competition (IGVC)

Secured second position in the AutoNav Challenge among 40+ participating international teams in Michigan, USA